South Korea Tells Startups... Don't Build Here

On a Tuesday morning in late January 2026, a founder in his late twenties sat in a Gangnam coffee shop, three blocks from the “Teheran Valley” tech hub.

His laptop was open to the enforcement decree issued by the Ministry of Science and ICT.

He wasn’t coding.

He wasn’t reviewing his sales pipeline.

He was using a calculator to figure out if he could afford a compliance officer.

The “Framework Act on the Development of the AI Industry” took effect on January 22, 2026. For his six-person startup, which used generative AI to build personalized education tools, the law felt less like a framework and more like a wall.

Under the new law, any realistic AI-generated content his platform produced now required visible labeling. His system qualified as “high-impact,” which meant mandatory impact assessments before deployment. Every high-impact algorithm required transparency documentation that his tiny team didn’t know how to write.

He looked at his bank balance. Six months of runway.

The quote from a local legal consultant sat in his inbox: 50 million won (about $35,000) for a basic compliance audit and framework setup.

He closed the laptop.

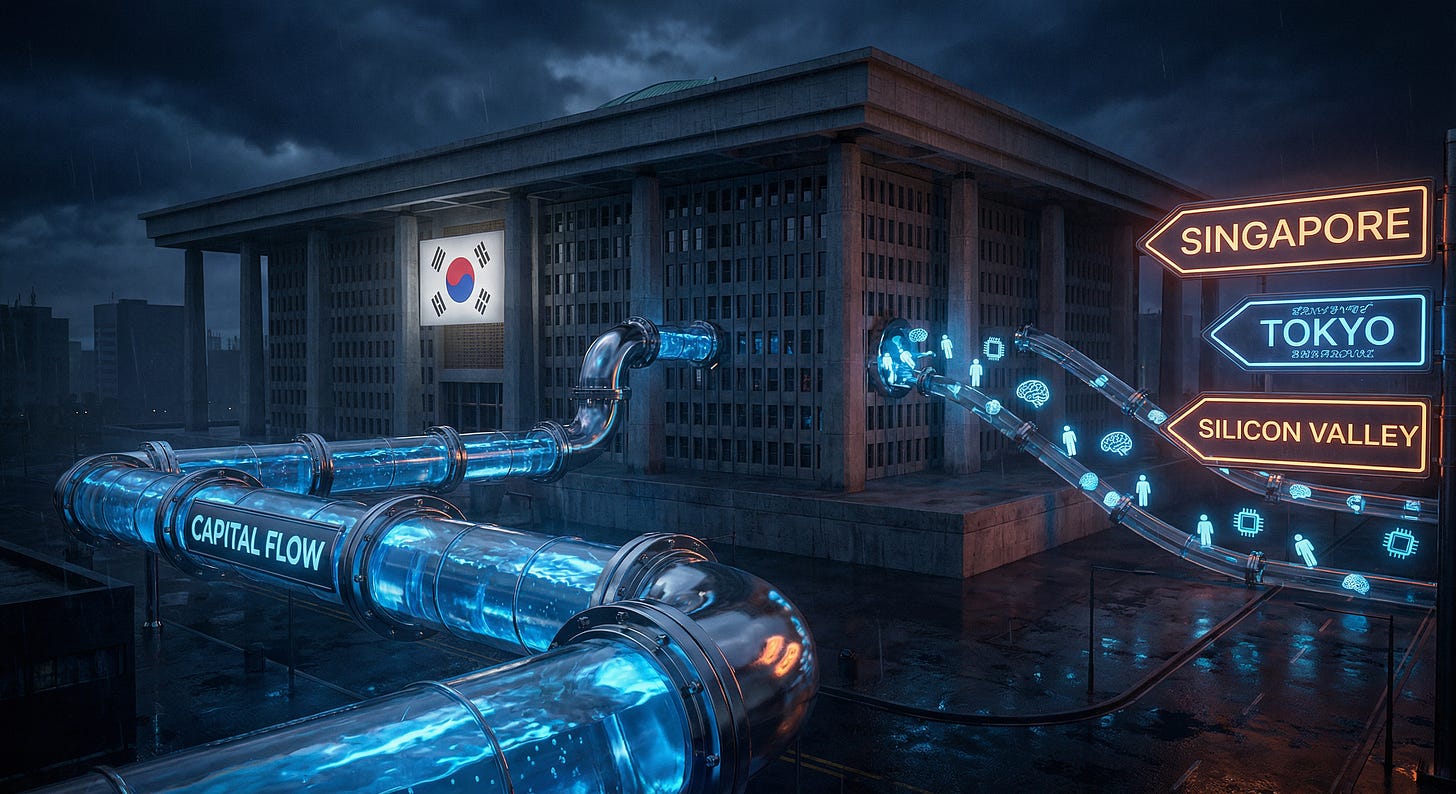

That week, he started looking at office space in Singapore.

South Korea has just become a global pioneer in AI regulation.

The country is now the second major economy, after the European Union, to implement a national AI law. The “Framework Act” is designed to balance the speed of innovation with the need for safety and trust.

On paper, it is a model law.

It mandates transparency, requires labeling of AI-generated media to fight deepfakes, and establishes a Presidential Council on National AI Strategy.

But there is a problem with the timing.

When the EU passed its AI Act, it was regulating a mature, trillion-dollar internal market. When the United States issues executive orders on AI safety, it is regulating the companies that built the technology.

South Korea is regulating an industry that barely exists yet.

According to data from DIGITIMES, South Korea accounts for only 1% of total global investment in AI startups. While Silicon Valley and Beijing engage in a multi-billion-dollar arms race, Seoul is busy writing the rules of the road before most of its drivers have even bought a car.

The Compliance Wall

The law is extensive. It mandates safety and offers limited flexibility.

Enforcement, which began on January 22, 2026, carries specific obligations for “high-impact” AI systems. These include systems used in healthcare, energy, public services, and criminal justice: areas where an AI mistake can change a life. For companies in these sectors, the law requires mandatory AI Impact Assessments and detailed safety documentation.

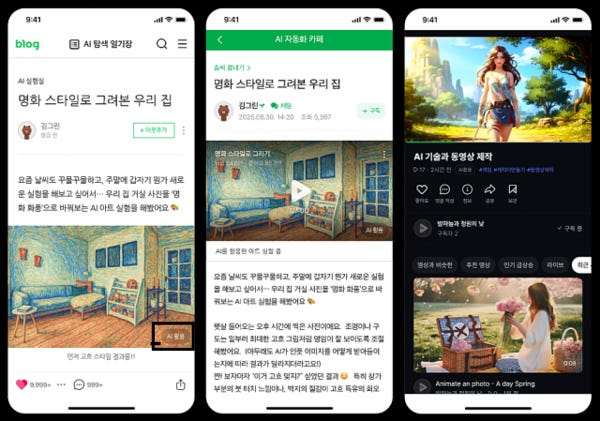

But the most visible change for the average user is the generative AI output labeling requirement.

Any AI-generated image, video, or voice that could be mistaken for real media must carry a visible or audible label. Clearly artificial outputs, like stylized artwork or animations, can use invisible watermarks or metadata instead. According to reports from OneTrust and PBS NewsHour, the visible labeling rules were a direct response to the explosion of deepfake fraud and political misinformation.

For a global giant like OpenAI or Google, these requirements are a nuisance. They have hundreds of lawyers and engineers dedicated to “safety and alignment.”

For a Korean startup, the requirements are an existential threat.

A study reported by KoreaTechDesk during the lead-up to the law’s enforcement found that only 2% of Korean AI startups had formal compliance frameworks in place.

The government recognized this gap.

The Ministry of Science and ICT implemented a one-year “grace period” focused on guidance rather than penalties. During this window, which expires in early 2027, the government promises to provide expert consulting and cost-sharing programs to help firms catch up.

But a grace period is a stay of execution, not a pardon.

Startups are already calculating the “compliance tax.” Hiring a dedicated data officer or risk manager can cost upwards of 100 million won (about $70,000) per year. In an environment where venture capital is becoming more selective, that is money that isn’t going into R&D or customer acquisition.

The Information Technology & Innovation Foundation was blunt in its assessment. In a September 2025 report, it warned that “one weak link could break” Korea’s AI policy. That link is the sheer weight of regulation on an ecosystem that is still in its infancy.

If you make it too expensive to start, nobody starts.

The Chaebol Trap

In South Korea, you cannot talk about the economy without talking about the Chaebol, the massive, family-led conglomerates like Samsung, LG, and Hyundai that dominate national life.

The AI law plays directly into their hands.

In August 2025, the government designated five consortia to lead the development of “Sovereign AI,” South Korea’s own foundation models meant to compete with OpenAI, Google, and Anthropic.

The selections?

Naver Cloud, SK Telecom, LG AI Research, NC AI, and Upstage.

These groups received 200 billion won (~$150 million) in direct government support to build domestic AI capability. The Chaebol and tech giants behind most of them already have the legal departments, the server farms, and the government relationships.

For Naver, a new regulation that requires an “AI Impact Assessment” is a standard Tuesday. For a three-person AI startup in Bundang, it is a reason to pivot to a different industry.

The Korea Startup Alliance has warned that this creates a high risk of “regulatory capture.”

When compliance costs are so high that only the largest incumbents can survive, the rules stop protecting the public and start protecting the monopoly. By setting the bar for “safety” and “transparency” this high, the government may be entrenching the dominance of the very conglomerates it claims to want to diversify away from.

If the only companies that can afford to be “safe” are the ones with 10 trillion won (~$7.5b) in the bank, then the future of Korean AI will be owned by the same people who owned the 20th century.

The survival data backs this up.

According to DIGITIMES, the survival rate for AI startups in Korea is just 56%. That figure predates the new law.

When you add a mandatory compliance layer to an industry where nearly half of participants already fail, regulation stops being a guardrail.

It becomes a filter.

The Brain Drain

In late 2025, a small team of engineers stood in the departure lounge at Incheon International Airport.

They were the core of a computer vision startup that had been based in Seoul. They weren’t going on vacation. They were headed to a co-working space in Chuo-ku, Tokyo.

“In Korea, the first question a lawyer asks is ‘Is this allowed?’” one of the engineers told a reporter for the Korea Herald. “In Tokyo or Singapore or Silicon Valley, the first question is ‘Does it work?’”

The Framework Act includes a specific requirement that has sent a chill through the international community: foreign AI companies with significant revenue or users in Korea must designate a domestic representative.

Specifically, any foreign firm with more than 1 trillion won in global revenue, more than 10 billion won in domestic AI revenue, or more than 1 million daily users in Korea must have a physical legal presence in the country to handle safety and compliance issues.

This sounds reasonable for a giant like Meta or TikTok. But for a mid-sized US or European AI company looking to expand into East Asia, it makes Korea the most expensive market to enter in the region.

If a developer in Austin has to choose between localizing their app for Japan and localizing it for Korea, and the Korean version requires a mandatory domestic representative and a government-approved impact assessment, they will choose Japan every time.

The result is a brain drain that works in both directions.

Korean talent is leaving to find less restrictive environments. International talent is staying away because the cost of entry is too high.

The Ministry of Science and ICT has tried to counter this with a massive investment in human capital. It plans to train 10,000 AI professionals annually and has tripled the AI R&D budget for 2026 to 10.1 trillion won (~$7.26B).

But government spending cannot buy culture.

If the state is spending $7 billion to train people, but those same people feel that the legal system is designed to punish their innovation, the government is essentially paying to train engineers for other countries.

High Stakes, Higher Friction

The legal gamble extends beyond startups. It is creating friction on the geopolitical stage.

South Korea sits in a delicate position between its security ally, the United States, and its largest trading partner, China.

The new AI law has already drawn scrutiny in Washington. The US government’s AI Action Plan emphasizes a “US-led AI technology stack.” When a close ally like Korea builds its own unique, restrictive regulatory framework, it creates compatibility problems.

If a US-made AI model has to be fundamentally re-coded or audited to meet Korean-specific “sovereignty” requirements, it creates a trade barrier. TechPolicy.Press reported that some US analysts believe Korea may “regret being first.”

The fear is that while Korea and the EU focus on building the most ethical AI, the US and China will simply build the most powerful AI.

By the time the safe models are ready, the race might already be over.

The Lessons of the 56%

Regulation is never neutral. It always has a bias toward the big and the slow.

The South Korean government believes that by setting high standards now, it will attract global investment from companies that want a “trustworthy” ecosystem. Officials point to the “National Growth Fund,” a 100 trillion won ($72B) public-private pool, as proof that they are putting their money where their mouth is.

They are betting that in 2030, the world will be tired of “move fast and break things” AI.

They believe companies will flock to the jurisdiction that has the clearest, safest rules.

Unfortunately, hopes and dreams don’t pay for AWS credits.

The reality today is that 45.5% of all startups funded in Korea in Q3 2025 were AI-focused, according to KoreaTechDesk. Total venture investment hit 4 trillion won (~$3B) that quarter, the strongest in four years.

The energy is there.

The talent is there.

But the 56% survival rate is a warning.

If Korea wants to be a global AI power, it needs more than a 10.1 trillion won (~$7.26B) R&D budget and a deepfake labeling law. It needs to ensure that the “Framework” doesn’t become a cage.

Early results from 2026 suggest a split outcome.

The Korea Herald reports that “Big Tech” is betting on Korea, drawn by the government’s massive subsidies for the Chaebol consortia. But at the same time, the “Uneasy Confidence” among smaller founders reported by KoreaTechDesk suggests the grassroots are wilting.

The gamble is simple: Korea is trading innovation for order.

If they are right, they will be the ethical hub of Asian AI.

If they are wrong, they will have spent $7 billion to build a museum for an industry that moved to Singapore.

For the founder in the Gangnam coffee shop, the answer isn’t in a government report.

It’s in the six months of runway he has left.

And as the grace period clock ticks toward 2027, the most important AI model in Korea isn’t ChatGPT or HyperCLOVA X.

It’s the one calculating whether it’s cheaper to comply, or cheaper to leave.

Curious Jay publishes weekly essays on why things don’t work the way they should.

Sources: